Generative AIs like ChatGPT are becoming essential tools for millions of users around the world. Chatbots can hold conversations, answer questions, and generate content. However, their ability to learn from, and potentially retain, information from our conversations raises important privacy concerns. As the Wall Street Journal points out, there are some things they should never be trusted with.

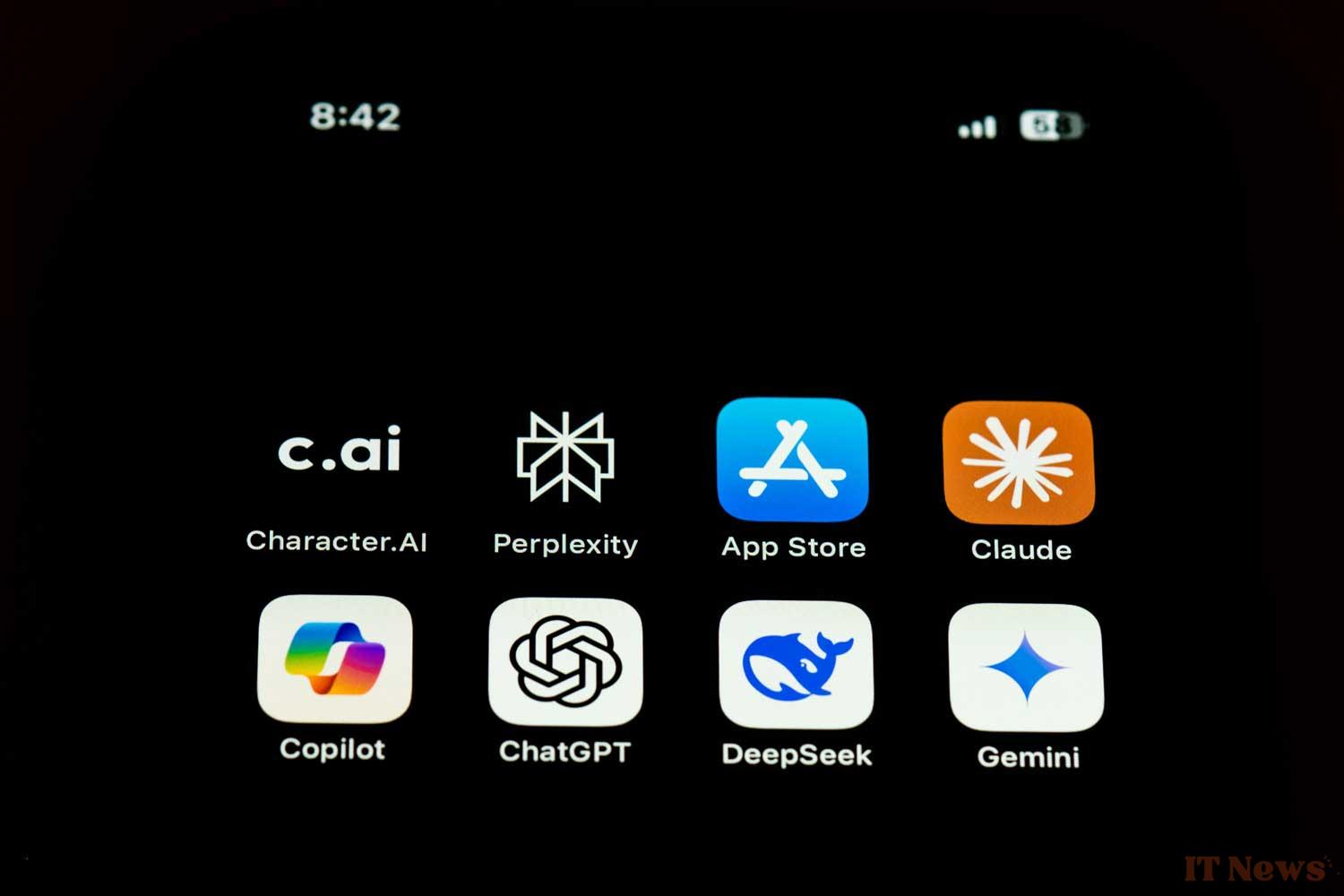

Here are five types of information that you should never share with ChatGPT. The same caution applies to other popular conversational AIs, such as Google Gemini, Microsoft Copilot, Mistral AI's Le Chat, Perplexity, and Anthropic's Claude.

Personal and Identity Information

Never disclose your Social Security number, ID or passport details, full postal address, or phone number. Even though filters exist, there is still a risk that this basic identifying information could be leaked or exploited. In March 2023, a bug at OpenAI allowed some users to see the titles of other people's conversations. The flaw was quickly fixed and its impact was limited, but it serves as a reminder of the need for constant vigilance.

Medical Results

Resist the temptation to ask a chatbot to analyze your medical results. Chatbots are not bound by the same confidentiality obligations as doctors or healthcare institutions. Disclosing sensitive medical information could lead to its misuse, for example in targeted advertising or, in the worst case, discrimination in insurance or employment.

Sensitive Financial Data

Never share your account numbers, online banking credentials, or any other information about your personal finances. These platforms are not designed to be secure storage for such critical data, and a leak could have serious consequences, such as fraud or drained accounts.

Confidential Company Data

Using ChatGPT for professional tasks is tempting, but avoid entering strategic data, trade secrets, or confidential internal information unless your company uses a private, secure enterprise version of the tool. Data shared with public versions could be logged and potentially used to train future models, which means sensitive information could later be exposed inadvertently.

Passwords and Login Credentials

Never entrust your passwords or login credentials to an AI tool. These tools are not designed for secure password management. Instead, use a dedicated password manager and always enable two-factor authentication for your important accounts.

Asking ChatGPT to help you create a secure password is a different matter. The AI can generate one for you or advise you on best practices without you having to share your existing credentials.

Why is this caution necessary?

These AIs improve by learning from conversations. Some can also remember personal details so they can tailor future responses to your preferences and make them more relevant. As a result, any information you share with an AI assistant may be stored, analyzed, or exposed. AIs offer extraordinary possibilities, but they should not be treated as confidants.

Generative AIs are powerful tools, not confidants

When you type something into a chatbot, "you lose possession of it," explains Jennifer King, a fellow at the Stanford Institute for Human-Centered Artificial Intelligence (HAI). She points out that chatbots are designed to keep the conversation going, so it is up to users to decide where to draw the line on what they share.
